In episode 1, Clemens introduced how Visual Studio Team System 2010 focuses on the collaborative effort between the tester and the other roles in the application lifecycle. In this second episode I will start with a short introduction to testing.

Testing is not an aim in itself! Testing is, in fact, a balancing act. Which risks must be covered, which results are to be delivered, and how much time and money can be spent, based on rational and economic grounds?

The right test strategy will balance risks and costs.

Testing supplies insight into the difference between the actual and the required status of an object. If quality is roughly described as 'meeting the requirements and expectations', testing delivers information on that quality.

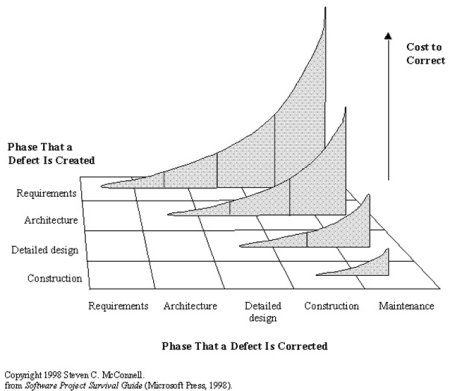

In this, there is no difference between developers who are testing, specialist testers during system testing, and generalist testers during the final acceptance test. Choosing the right test strategy is a joint effort between every tester and the other roles in the application lifecycle. This collaboration is needed because, besides insight into the business risks, much (technical and test) knowledge is needed to find the most important defects as early as possible at the lowest price.

The collaboration doesn't stop after choosing the best test strategy. After the test cases are designed, test execution must also be organized as a joint effort. Collaboration between the different roles in the application lifecycle is not self-evident. By nature, it seems that developers and testers don't (want to) understand each other. With this attitude, it is difficult to discover that you need each other to deliver software of good quality.

...don’t (want to) understand each other.

The consequence of this virtual (and sometimes real) wall is calamitous for quality. Many bugs arise from wrong assumptions about, and wrong interpretations of, the generally unclear specifications and requirements. Reporting, analyzing and resolving these bugs takes a lot of time, especially when they prove to be not reproducible or incorrect.

An example: after a development project of 5 man-years, testing is done by a team of generalist testers. With much enthusiasm the test team executes the test cases they designed while the system was being built. After 3 months the verdict is given: a negative release advice. Because of 8 blocking and 22 big defects, the test team advises NOT to go into production. As you may understand, this was a big disappointment for both the project team and the business department. A taskforce was established to keep the delay (damage) under control. After the defect analysis the general feeling improved: after filtering out the test faults (20%), the functional wishes (20%) and the changes that had not been passed on to the testers (30%), the repair costs for the remaining 30% were approximately 200 hours. In fact, the development team had done a great job. Better communication from both sides, before and during test execution, would probably have turned the verdict into a positive release advice worth celebrating. Much, much better than this cold shower!
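
The arithmetic behind this example can be made explicit. The sketch below is a minimal illustration in Python, not part of the original project: it assumes the percentages apply to the 30 reported findings (8 blocking plus 22 big), and the names `reported`, `shares` and `breakdown` are hypothetical.

```python
# Hypothetical triage breakdown for the example above.
# Assumption: the percentages apply to the 30 reported findings (8 blocking + 22 big).
reported = 8 + 22

# Share of each category in percent, as given in the example.
shares = {
    "test faults": 20,
    "functional wishes": 20,
    "changes not passed on to testers": 30,
    "genuine defects": 30,
}


def breakdown(total, shares_percent):
    """Split a total number of findings over categories by integer percentage."""
    return {name: total * pct // 100 for name, pct in shares_percent.items()}


counts = breakdown(reported, shares)
repair_hours = 200  # approximate repair cost stated in the example
hours_per_defect = repair_hours / counts["genuine defects"]

for name, count in counts.items():
    print(f"{name}: {count}")
print(f"average repair hours per genuine defect: {hours_per_defect:.1f}")
```

Under that assumption, only 9 of the 30 findings were genuine defects, which is consistent with a repair effort of roughly 200 hours.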

The above paragraphs show that the collaboration between the tester and developer is important for success. If the collaboration between the different roles in the application lifecycle is not actively stimulated and facilitated, the proverbial wall will arise.

In the next episode Clemens shows us how Visual Studio Team System 2010 will support and stimulate this collaboration...

Application Lifecycle Management [ALM] should ensure that an organization experiences an improved "business as usual" when new or changed functionality is implemented. Other (older) industries can give this assurance, so the ICT industry should follow (soon?). This article will, I hope, offer some next steps towards adulthood by giving [ALM] directions on how to prevent costly or even lethal disasters caused by bugs.

Business Continuity has two viewpoints for [ALM]:

How to prevent a major disruption caused by the implementation of a new or changed application?

Pillar 1: Finding Bugs AEAP (As Early As Possible)

Testing Requirements

Collaboration is a critical success factor in preventing a major disruption caused by the implementation of a new or changed application! All parties within [ALM] have to work together to create good test coverage from the earliest to the last phases of a project. I am sure that only when the Quality Levels, Learning Cycles and Metrics are in place can a good Business Continuity risk advice be given, one that ensures the organization experiences an improved "business as usual" when new or changed functionality is implemented.
