Application Lifecycle Management [ALM] should ensure that an organization experiences an improved "business as usual" when new and/or changed functionality is implemented. Other (older) industries can give that assurance, so the ICT industry should follow (soon?). This article will, I hope, offer some next steps towards adulthood by giving [ALM] directions on how to prevent costly or even lethal disasters caused by bugs.

Business Continuity has two viewpoints for [ALM]:

- [ALM] is part of the interdisciplinary concept called Business Continuity Planning (BCP), which is used to create and validate a practiced logistical plan for how an organization will recover and restore partially or completely interrupted critical function(s) within a predetermined time after a disaster or extended disruption (see Wikipedia for more information),

- [ALM] must give assurance that developing and/or changing applications won't create disasters or extended disruptions! (I won't explain what software bugs can accomplish...).

In this entry I will give some thoughts concerning the second viewpoint:

How to prevent a major disruption caused by the implementation of a new or changed application?

Of course, the Acceptance Test is very important in our "battle" to prevent the worst from happening. But, as I stated earlier in my article "What's in the Box?", the Acceptance Test alone is not enough! Based on the Business Risks (Business Driven Test Management *), the Master Test Strategy in [ALM] must contain at least the following three pillars:

- Finding Bugs AEAP (As Early As Possible)

- Bug Prevention by Testing Requirements and Use Cases

- Gathering and Analyzing data to do Business Continuity Predictions

*) Business Driven Test Management gives the client a grip on the test process, uses the client's language, delivers appropriate test coverage in the right place and makes test results visible to the client (see TMap.net for more information).

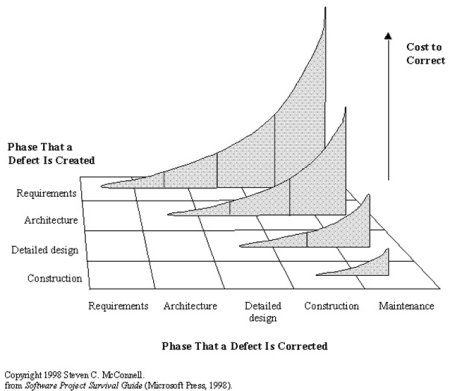

Pillar 1: Finding Bugs AEAP (As Early As Possible)

Finding bugs as early as possible prevents changes to the software in the last phases of a project.

Recently I participated in an (early) evaluation of an organisation where the strategy of finding bugs as early as possible was implemented for the complete software engineering department by choosing the Quality Levels, tuning the Test Coverage of the successive test levels and introducing Learning Cycles. They had great results!

See two earlier articles on this topic:

- Testing in the Lifecycle [ALM]... a focus on test coverage,

- Finding Bugs AEAP (As Early As Possibly).

Problem solved? No, not yet. Once the number of bugs had gone down, it became clear that late change requests and wishes for extended functionality were disturbing the implementation of the applications. So the next pillar became more important...

Pillar 2: Bug Prevention by Testing Requirements and Use Cases

If software development is based on inaccurate requirements, the software will be unsatisfactory no matter how well the code is written. The users will then, no doubt, want to change the application before it is implemented, and changing an application in the last stages of a project generates huge risks.

Testing Requirements

Testing the requirements in the early stages of the project will minimize changes to the application just before implementation. To give the testing of requirements a head start, I derived a checklist from the article "An Early Start to Testing: How to Test Requirements" (Suzanne Robertson):

- Does each requirement have a quality measure that can be used to test whether any solution meets the requirement?

- Does the specification contain a definition of the meaning of every essential subject matter term within the specification?

- Is every reference to a defined term consistent with its definition?

- Is the context of the requirements wide enough to cover everything we need to understand?

- Have we asked the stakeholders about conscious, unconscious and undreamed-of requirements? Can you show that a modeling effort has taken place to discover the unconscious requirements? Can you demonstrate that brainstorming or similar efforts have taken place to find the undreamed-of requirements?

- Is every requirement in the specification relevant to this system?

- Does the specification contain solutions posturing as requirements?

- Is the stakeholder value defined for each requirement?

- Is each requirement uniquely identifiable?

- Is each requirement tagged to all parts of the system where it is used? For any change to requirements, can you identify all parts of the system where this change has an effect?

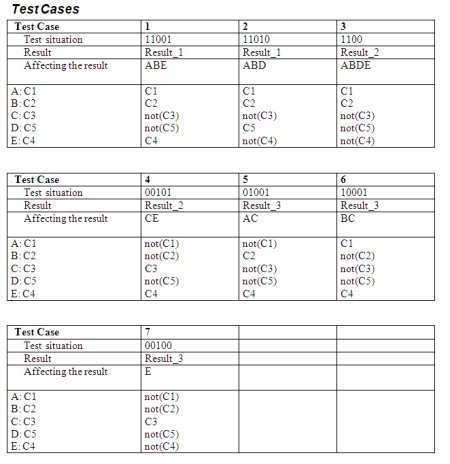
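A few of the checklist questions above (quality measure, stakeholder value, unique identification, tagging to system parts) can be checked mechanically once requirements are stored as structured records. The following is only a minimal sketch under that assumption; the `Requirement` record, its field names and both helper functions are hypothetical and do not come from Suzanne Robertson's article. The remaining questions still need a human reviewer.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    """Hypothetical requirement record; the field names are illustrative only."""
    req_id: str
    text: str
    quality_measure: str = ""    # how a solution is tested against the requirement
    stakeholder_value: str = ""  # value of the requirement to the stakeholder
    used_in: list[str] = field(default_factory=list)  # system parts it is tagged to


def check_requirements(requirements: list[Requirement]) -> list[str]:
    """Return findings for the checklist questions that can be checked mechanically."""
    findings = []
    seen_ids = set()
    for req in requirements:
        if req.req_id in seen_ids:
            findings.append(f"{req.req_id}: identifier is not unique")
        seen_ids.add(req.req_id)
        if not req.quality_measure.strip():
            findings.append(f"{req.req_id}: no quality measure")
        if not req.stakeholder_value.strip():
            findings.append(f"{req.req_id}: no stakeholder value")
        if not req.used_in:
            findings.append(f"{req.req_id}: not tagged to any part of the system")
    return findings


def impacted_parts(requirements: list[Requirement], changed_id: str) -> list[str]:
    """List every system part tagged to the requirement that is about to change."""
    parts = set()
    for req in requirements:
        if req.req_id == changed_id:
            parts.update(req.used_in)
    return sorted(parts)


if __name__ == "__main__":
    reqs = [
        Requirement("R1", "Customer can log in", "95% of logins within 2 s",
                    "high", ["auth", "ui"]),
        Requirement("R2", "Customer can log out"),
    ]
    for finding in check_requirements(reqs):
        print(finding)
    print(impacted_parts(reqs, "R1"))
```

An empty list of findings does not mean the requirements are good; it only means the mechanical part of the checklist has been passed.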

Testing Use Cases

After the requirements are tested, they evolve into a functional model (for instance Use Cases) of the required application. To test the Use Cases you can use the checklist I derived from the article "Use Cases and Testing" (Lee Copeland):

1) Syntax Testing

- Complete:

  - Are all use case definition fields filled in? Do we really know what the words mean?

  - Are all of the steps required to implement the use case included?

  - Are all of the ways that things could go right identified and handled properly? Have all combinations been considered?

  - Are all of the ways that things could go wrong identified and handled properly? Have all combinations been considered?

- Correct:

  - Is the use case name the primary actor's goal expressed as an active verb phrase?

  - Is the use case described at the appropriate black box/white box level?

  - Are the preconditions mandatory? Can they be guaranteed by the system?

  - Does the failed end condition protect the interests of all the stakeholders?

  - Does the success end condition satisfy the interests of all the stakeholders?

  - Does the main success scenario run from the trigger to the delivery of the success end condition?

  - Is the sequence of action steps correct?

  - Is each step stated in the present tense with an active verb as a goal that moves the process forward?

  - Is it clear where and why alternate scenarios depart from the main scenario?

  - Are design decisions (GUI, Database, …) omitted from the use case?

  - Are the use case "generalization," "include," and "extend" relationships used to their fullest extent but used correctly?

- Consistent:

  - Can the system actually deliver the specified goals?

2) Domain Expert Testing

- Complete:

  - Are all actors identified? Can you identify a specific person who will play the role of each actor?

  - Is this everything that needs to be developed?

  - Are all external system trigger conditions handled?

  - Have all the words that suggest incompleteness ("some," "etc."…) been removed?

- Correct:

  - Is this what you really want? Is this all you really want? Is this more than you really want?

- Consistent:

  - When we build this system according to these use cases, will you be able to determine that we have succeeded?

  - Can the system described actually be built?

3) Traceability Testing

- Complete:

  - Do the use cases form a story that unfolds from highest to lowest levels?

  - Is there a context-setting, highest-level use case at the outermost design scope for each primary actor?

- Correct:

  - Are all the system's functional requirements reflected in the use cases?

  - Are all the information sources listed?

- Consistent:

  - Do the use cases define all the functionality within the scope of the system and nothing outside the scope?

  - Can we trace each use case back to its requirement(s)?

  - Can we trace each use case forward to its class, sequence, and/or state-transition diagrams?
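Two questions from the use case checklist lend themselves to a small script: spotting words that suggest incompleteness, and checking traceability between use cases and requirements. The sketch below is only an illustration. The word list, the function names and the dictionary that maps use case names to requirement identifiers are my own assumptions, not part of Lee Copeland's article.

```python
import re

# Assumed starter list of words that suggest incompleteness; extend as needed.
VAGUE_WORDS = ("some", "etc", "and so on", "various")


def find_vague_words(step: str) -> list[str]:
    """Return the vague words that occur as whole words in a use case step."""
    found = []
    for word in VAGUE_WORDS:
        if re.search(rf"\b{re.escape(word)}\b", step, flags=re.IGNORECASE):
            found.append(word)
    return found


def check_traceability(use_cases: dict[str, list[str]],
                       requirement_ids: set[str]) -> tuple[list[str], list[str]]:
    """Check tracing in both directions.

    use_cases maps a use case name to the requirement ids it claims to realise.
    Returns (use cases without a known requirement, requirements without a use case).
    """
    untraced_use_cases = sorted(
        name for name, ids in use_cases.items()
        if not set(ids) & requirement_ids
    )
    covered = {req_id for ids in use_cases.values() for req_id in ids}
    uncovered_requirements = sorted(requirement_ids - covered)
    return untraced_use_cases, uncovered_requirements


if __name__ == "__main__":
    print(find_vague_words("The system sends some notifications, etc."))
    print(check_traceability(
        {"Withdraw cash": ["R1"], "Print receipt": []},
        {"R1", "R2"},
    ))
```

A use case without a requirement points at functionality outside the scope; a requirement without a use case points at functionality that is missing from the model.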

Pillar 3: Gathering Data and Analyzing Trends to do Business Continuity Predictions

It is NOT possible to predict Business Continuity based only on the testing process of the project concerned. A better foundation is needed for the decision to implement a changed or new application. In the Rosario TAP we are doing some research on gathering data and analyzing trends to make Business Continuity predictions from three viewpoints:

- Fault Detection Trends

- Change Control Trends (Changes in Requirements, Specifications, LOC, ...)

- Project Control Trends (Estimations, Budget, Overtime,...)

We’ve just started on this topic, so I will keep you posted in later entries.
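To make the idea of a fault detection trend a bit more concrete, here is a minimal sketch that fits a straight line through the number of newly found bugs per week. This is not the approach being researched in the Rosario TAP; the weekly counts, the function names and the simple rule "a negative slope means fewer bugs are being found" are assumptions for illustration only.

```python
def trend_slope(counts: list[float]) -> float:
    """Least-squares slope of the counts against their index (week 0, 1, 2, ...)."""
    n = len(counts)
    if n < 2:
        raise ValueError("need at least two data points to compute a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(counts) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(counts))
    denominator = sum((x - mean_x) ** 2 for x in range(n))
    return numerator / denominator


def is_declining(counts: list[float], window: int = 4) -> bool:
    """True when the slope over the most recent `window` weeks is negative."""
    return trend_slope(counts[-window:]) < 0


if __name__ == "__main__":
    bugs_found_per_week = [12, 15, 10, 8, 6, 4]  # hypothetical data
    print(trend_slope(bugs_found_per_week))
    print(is_declining(bugs_found_per_week))
```

A declining trend on its own proves little: it can also mean that less testing was done. That is why it has to be read together with the change control and project control trends.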

Collaboration is a critical success factor in preventing a major disruption caused by the implementation of a new or changed application! All parties within [ALM] have to work together to create good test coverage from the earliest to the last phases of a project. I am sure that only when the Quality Levels, Learning Cycles and Metrics are in place can sound Business Continuity risk advice be given, so that an organization experiences an improved "business as usual" when new and/or changed functionality is implemented.

Rob