Both ALM (Application Lifecycle Management) and MBT (Model Based Testing) are hot. I'm pretty busy running from customer to customer starting/supporting MBT proof of concepts and assignments. With some explanation all my contacts are convinced that the combination of ALM and MBT is THE roadmap for the future.

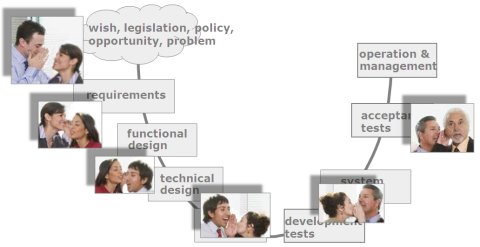

The advantages of ALM can already be implemented by choosing for the new generation of tools of Microsoft (VSTS2010) and IBM (JAZZ etc.) are presenting to strongly support the collaboration of the different roles in and around the ICT. Model Based Testing has not yet that same level of maturity. Most participants in the Model Based Testing world are very ambitious: They try to Generate Test Cases for direct automatic execution, based on test specific models.

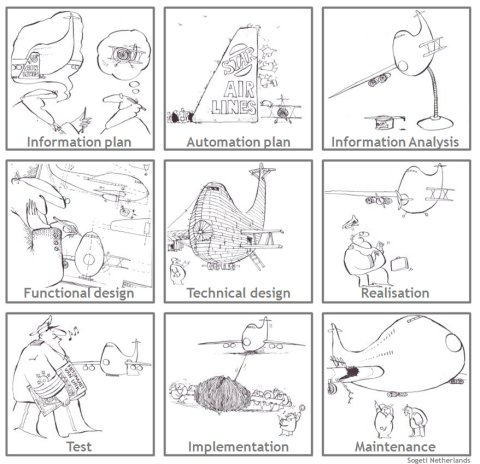

I think this is a bridge to far for most companies using for instance mainly ERP-software. In the ERP-scope most of the testing is still done manually, and jumping to this ultimate form of Model Based Testing (Generate Test Cases for direct automatic execution) will be very tricky and/or costly. That's why we (Sogeti) are introducing also a basic form of Model Based Testing (STaaS/COVER), using the same models as the developers do.

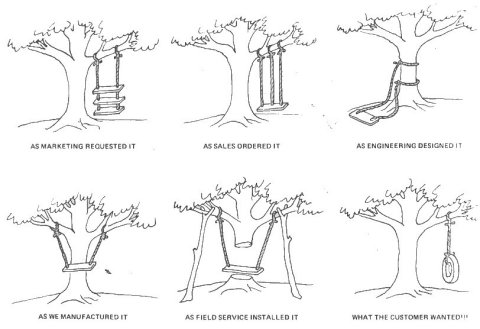

"MBT: ...a bridge to far..."

MBT: Generate Test Cases for direct automatic execution

Does this mean that Generating automated tests is a dead end? NO! It is a very good approach for testing high risk functionalities, which are to risky or to complex to test manually.

Making test specific models is a costly business and top designers/test engineers are needed to make it work. So be sure to pinpoint these heavy coverage and tooling only on the high risk areas. AND!! Do not try to make the test specific models in the Testing Silo! Look for collaboration with the design department to get these test specific models in the same configuration mnagement as the models the devlopers use to build the software. Preferably in an "ALM Center Configuration Management".

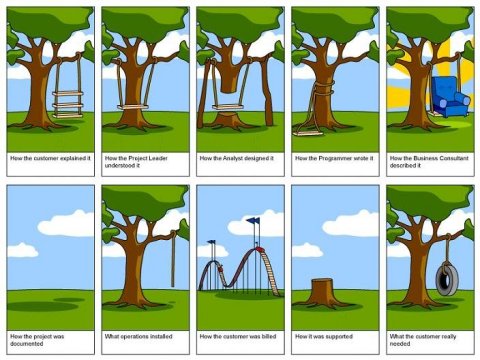

MBT-basic: Using the same models as the developers do

On the other hand, when the ambition of Model Based Testing is lowered to only generating the test cases, it is a complete different story. Especially the generation of the (more global) logical test cases can be done from the same (functional and/or requirement) models the developers use as a bases for creating the software. For instance process flows (activity diagrams), decision tables and pseudo code are a perfect basis for generating (logical) test cases.

Enrichment of these models (in collaboration with the design department) will bring us to the next step: Generating the physical test cases. In that case the Test Cases are no longer Configuration Items (costly maintenance!) but Test Cases become Work Items (if desired: directly generated from the same models the developers use to build the software).

Rob Kuijt

The advantages of ALM can already be implemented by choosing for the new generation of tools of Microsoft (VSTS2010) and IBM (JAZZ etc.) are presenting to strongly support the collaboration of the different roles in and around the ICT. Model Based Testing has not yet that same level of maturity. Most participants in the Model Based Testing world are very ambitious: They try to Generate Test Cases for direct automatic execution, based on test specific models.

I think this is a bridge to far for most companies using for instance mainly ERP-software. In the ERP-scope most of the testing is still done manually, and jumping to this ultimate form of Model Based Testing (Generate Test Cases for direct automatic execution) will be very tricky and/or costly. That's why we (Sogeti) are introducing also a basic form of Model Based Testing (STaaS/COVER), using the same models as the developers do.

"MBT: ...a bridge to far..."

MBT: Generate Test Cases for direct automatic execution

Does this mean that Generating automated tests is a dead end? NO! It is a very good approach for testing high risk functionalities, which are to risky or to complex to test manually.

Making test specific models is a costly business and top designers/test engineers are needed to make it work. So be sure to pinpoint these heavy coverage and tooling only on the high risk areas. AND!! Do not try to make the test specific models in the Testing Silo! Look for collaboration with the design department to get these test specific models in the same configuration mnagement as the models the devlopers use to build the software. Preferably in an "ALM Center Configuration Management".

MBT-basic: Using the same models as the developers do

On the other hand, when the ambition of Model Based Testing is lowered to only generating the test cases, it is a complete different story. Especially the generation of the (more global) logical test cases can be done from the same (functional and/or requirement) models the developers use as a bases for creating the software. For instance process flows (activity diagrams), decision tables and pseudo code are a perfect basis for generating (logical) test cases.

Enrichment of these models (in collaboration with the design department) will bring us to the next step: Generating the physical test cases. In that case the Test Cases are no longer Configuration Items (costly maintenance!) but Test Cases become Work Items (if desired: directly generated from the same models the developers use to build the software).

Rob Kuijt

( 3 / 786 )

( 3 / 786 )

Calendar

Calendar